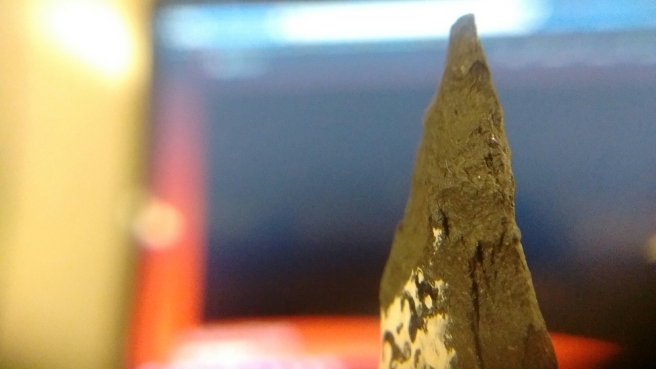

Following my last post on basic meshing, the amphoriskos was left as a solid Lump of Thing. Not ideal for digital display purposes, and certainly not for printing (the current be-all-end-all of digitization). So, how does one make a jug more jug-like? I’ll show you how. I’ll be starting with this:

… and working from there. File’s up for download iffn you want to follow along. The first thing you need to do is download and open Meshmixer (link) (henceforth MM, as I am lazy and don’t want to keep typing “Meshmixer”).

Alignment and Scaling

Skipping ahead to the point where you’ve opened your object in MM, you’ll notice that the orientation is probably off-kilter. To fix it, you’ll need to go to Edit>Transform. You’ll be faced with a window where you can input values and an xyz-axis like the one below.

Wobble it around until you’ve got it however you think is right-side-up. Heads up, this could take a while. Once you’re there, click Accept, then go to Edit>Align; if all is well, there should be another window and a bright three-axis symbol with the y-axis going straight up. Click Accept again, and go back to Edit>Transform. Now comes the fun bit – scaling. I know that the amphoriskos is about 10cm (100mm) high, so I can change the y-scale accordingly and let the auto-scaling do the rest of the work. Because mine initially registered as being fractions of a millimeter large, there was some glitchiness when I expanded it 100-ish times. The glitches were superficial, but if you get them and they bother you, save the file and re-open it; that should clear it up.
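If you'd rather work out the scale factor than eyeball it, the arithmetic is just target height divided by current height. Here's a rough Python sketch of that idea. It is not anything MM does internally, and the vertex list and function names are made up for illustration; it assumes the model is already aligned so that y points up.

```python
def uniform_scale_factor(vertices, target_height_mm, axis=1):
    """Factor that makes the extent along `axis` (1 = y) equal target_height_mm."""
    coords = [v[axis] for v in vertices]
    current = max(coords) - min(coords)
    if current <= 0:
        raise ValueError("model has no extent along the chosen axis")
    return target_height_mm / current


def scale_vertices(vertices, factor):
    """Apply the same factor to x, y and z so the proportions are kept."""
    return [tuple(c * factor for c in v) for v in vertices]


# Hypothetical vertices for a model that is a bit under 1 unit tall along y.
vertices = [(0.0, 0.0, 0.0), (0.3, 0.95, 0.1), (-0.2, 0.5, 0.4)]

factor = uniform_scale_factor(vertices, target_height_mm=100.0)
scaled = scale_vertices(vertices, factor)
scaled_height = max(v[1] for v in scaled) - min(v[1] for v in scaled)
```

Scaling all three axes by one factor is the same thing the auto-scaling does when you type a new y value: the jug gets bigger without getting squashed.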

You’re now done with setup and can do the real work.

Solidifying and Hollowing

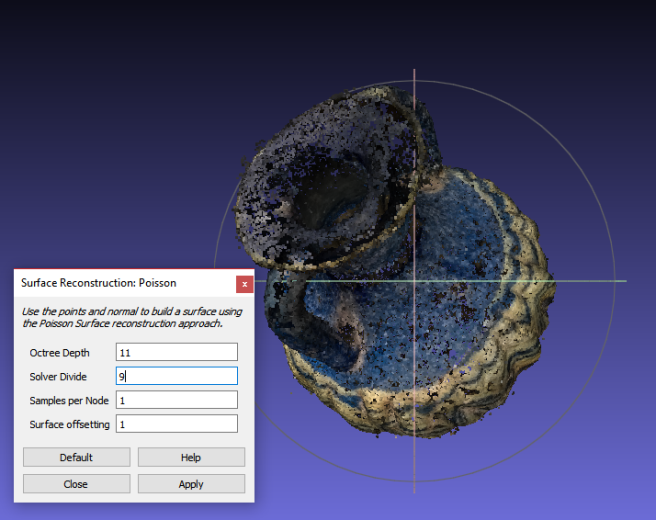

Now, for reasons I can only assume are because MM and Meshlab aren’t 100% compatible*, STL files don’t register as being properly solid and can’t be hollowed out. This is easily fixed by going to Edit>Make Solid and inputting the best values – I just maxed out the quality and let nature take its course.
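For the curious, one common way to think about "properly solid" is that the mesh is watertight: every edge is shared by exactly two triangles, so there are no holes or dangling flaps. I don't know whether that's the exact test MM runs, so take this as a minimal sketch of the general idea. It assumes the triangles are given as vertex indices with duplicate vertices already merged (raw STL repeats the coordinates per triangle, so you'd need to weld them first).

```python
from collections import Counter


def is_watertight(triangles):
    """True if every undirected edge is used by exactly two triangles."""
    edge_counts = Counter()
    for a, b, c in triangles:
        for u, v in ((a, b), (b, c), (c, a)):
            # Sort the pair so (1, 2) and (2, 1) count as the same edge.
            edge_counts[(min(u, v), max(u, v))] += 1
    return bool(edge_counts) and all(n == 2 for n in edge_counts.values())


# A closed tetrahedron, and the same shape with one face missing.
tetra = [(0, 1, 2), (0, 3, 1), (1, 3, 2), (2, 3, 0)]
open_tetra = tetra[:-1]

closed_ok = is_watertight(tetra)
open_ok = is_watertight(open_tetra)
```

A mesh that fails a check like this has a gap somewhere in its skin, which is the kind of thing a remeshing step such as Make Solid papers over.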

With my newly solid block, I went to Edit>Hollow and changed Offset distance to 3.25mm, because that seemed right-ish. FYI, printers have a minimum “safe” threshold for borders – generally about 2mm – otherwise they’re in danger of collapsing. Consider that before making the walls thinner than 2mm. To check that the hollowing worked, I Edit>Slice’d my object then Ctrl+Z’d it back to wholeness when I was done.
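A quick sanity check before committing to a wall thickness: the cavity left at any point is the outer width minus two walls, so a narrow neck can end up with no hole at all if the offset is too greedy. The sketch below just does that arithmetic. The 2mm floor is the rule of thumb from above, not a spec for any particular printer, and the 20mm neck width is a made-up number for illustration.

```python
MIN_SAFE_WALL_MM = 2.0  # rule of thumb from the post, not a printer spec


def wall_is_safe(wall_mm, min_safe_mm=MIN_SAFE_WALL_MM):
    """True if the wall is at least the minimum 'safe' thickness."""
    return wall_mm >= min_safe_mm


def cavity_width(outer_width_mm, wall_mm):
    """Width of the hollow left inside: outer width minus a wall on each side."""
    return max(0.0, outer_width_mm - 2 * wall_mm)


wall = 3.25            # the offset distance used in this post
neck_outer = 20.0      # hypothetical neck width, for illustration only

safe = wall_is_safe(wall)
neck_cavity = cavity_width(neck_outer, wall)
```

If the cavity width comes out at zero for the narrowest part of the model, that part stays solid no matter what Edit>Hollow does elsewhere.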

Opening the Neck

This bit, this bit here, was the most frustrating bit. I went through 5 pieces of software trying to get it right, then went back to MM because it ended up being easiest**. I’m still not entirely happy with it, but it works, so whatever.

Looking back at the hollow half-piece above, you can see that there’s a 3.25mm piece covering the mouth of the neck*** – I wanted that gone. Because jar.

So, what I did was go to Meshmix>Primitives and drag-and-dropped a sphere into the neck hole. After scaling the sphere to roughly neck-hole size, I selected Boolean Subtract from the menu and clicked accept. After it had run, I was left with this:

Not super tidy, and not physically accurate, but pretty good for someone who can’t redo the photos. Almost done!

Sculpting

So, for the last bit of proper work, I smoothed everything out into a close approximation of correctness.

That ended up being:

- Flattening out the divots where that stand had been

- Closing up the hole on the lip

- Filling in and flattening out the irregularities inside the neck

Since I didn’t want the inside of the neck to be too uniform anyway, I had a pretty loose hand with the tools. I ended up having to export it as an STL file and open it up in Meshlab a couple of times to check for problems, but it all worked out in the end. This bit’s all pretty intuitive, so just fiddle around with it.

Save and export as an STL file.

You are now ready for printing!

Note: Immediately after finishing this post, Meshmixer just. Stopped. Despite my best efforts, and multiple re-installs across multiple computers, it is still too fritzed to use. Because of that and the fact that I made a mistake while editing, there’s no Sketchfab window yet.

Note 2 (April 4, 2017): Problem resolved. It turned out that my model was too small – 10cm high. I guess the first time I left the scaling for last, but started with it pretty much immediately on subsequent edits. Scaling up let normal function resume, and the issue was logged for future updates.

*Probably wrong

**123D + Sculpt: Can’t import files to edit.

TinkerCAD: Can import STL files, but the file exceeded the max number of triangles.

123D Design: Can import files, file didn’t exceed parameters, laptop didn’t have enough power to run it properly.

Blender: Probably does everything anyone could ever want and washes your car, too, but the learning curve is insane. Waaaaayyyyy more work than a hole warrants.

Meshlab: Not made for this, bad things happened. Just no.

123D Design also had some terms of use that I didn’t agree with (Autodesk has the right to use any creations for advertising-type purposes; and it looked like there was some stuff about commercial use [i.e. freeware=you can’t profit from stuff you make], but I didn’t read too much into that).

*** That… doesn’t sound right…

† Speaking from personal experience, you want the supports. Forgetting them will probably result in either a collapsed model or having to MacGyver a fix while trying to not get burned by molten plastic.

This is Apollo Lykeios -because he has a lyre, you see?- and he was the first thing I took pictures of to run through Python Photogrammetry Toolbox (PPT). There were 215 photos, total. From the tips of his toes, to the draping under his hand, to the curls in his hair, I had it all.
